2010

What happens when you have several rows of server racks co-located at a data center that loses its lease? You spend most of a year figuring out how to move several rows of racks filled with equipment across the country over a Labor Day weekend.

In 2020, this problem would probably result in a basic migration to Azure, AWS, or your cloud provider of choice. Worst case, there's higher-than-usual billing for a month or two, but more likely hosting in the cloud ends up cheaper and the net result is a smaller bill. In 2010, there was still widespread debate over whether you should virtualize your SQL, Exchange, or other high-volume workloads (you could!). Cloud was still a dirty word in security circles, and some of my fellow engineers would tell me they felt all this stuff was likely a fad. Bottom line, people still really trusted bare metal hosting, and a struggling economy was no time to try anything cute.

If you have any fortune at all, DR plans are a formality and an annual exercise. We executed ours.

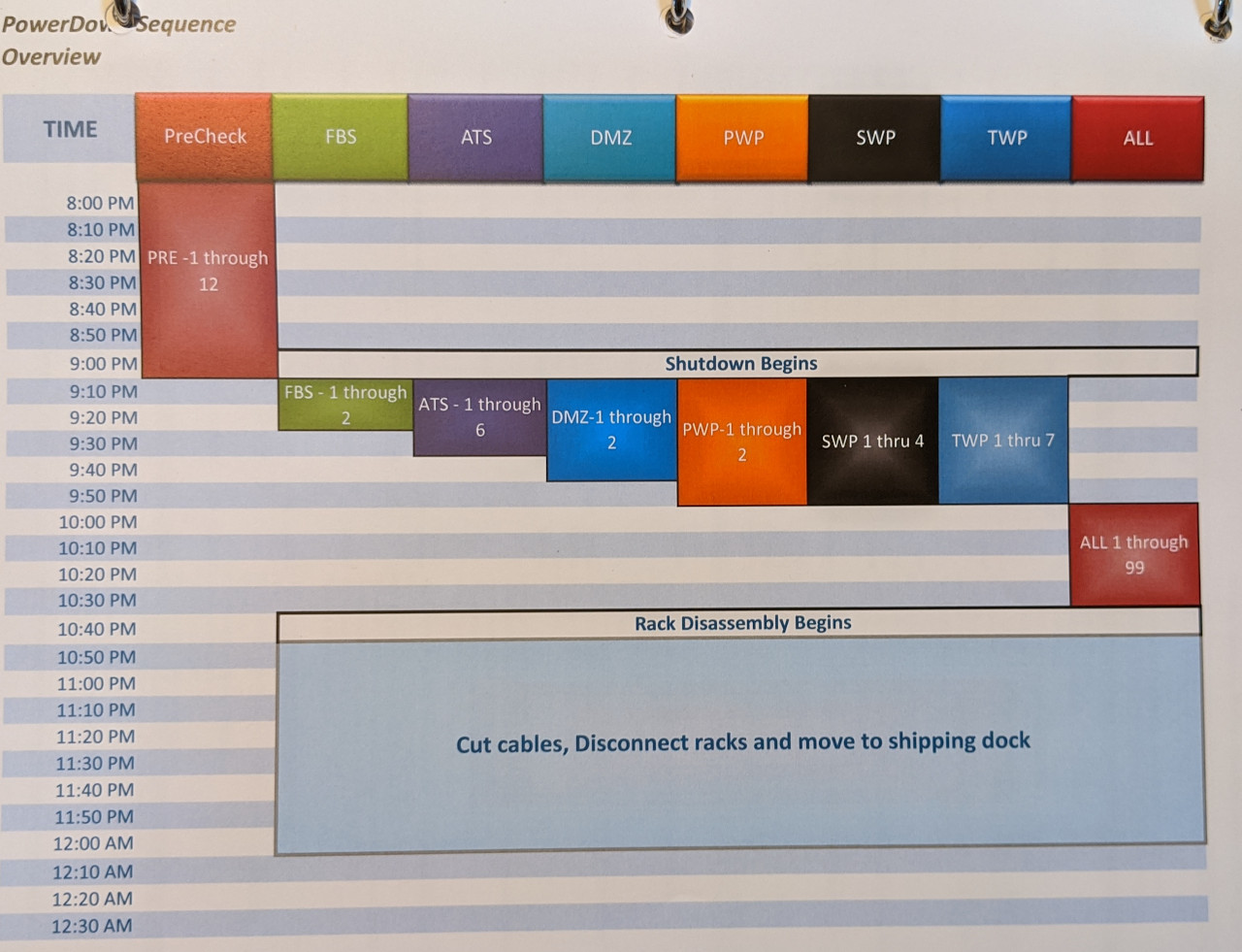

The engineering team was physically located in Chicago, Atlanta, and Houston. The facility that was being shut down was located in Atlanta, and the destination for the racks was in Dallas. This whole project required flying most of the engineering team to Atlanta, and then having them disconnect and roll fully loaded racks into the back of trucks bound for Dallas. Most of the team would then take a mix of flights to Dallas to unload the same things they had just loaded in Atlanta. Some of the team had the honor of participating in a several-car convoy surrounding the trucks. Truth is stranger than fiction, but some people I worked with on this project can actually list technical support of an armed convoy on their list of credentials. A few others and I stayed in our respective cities until the cords were about to be cut, and then executed our disaster recovery plans before boarding flights straight into Dallas.

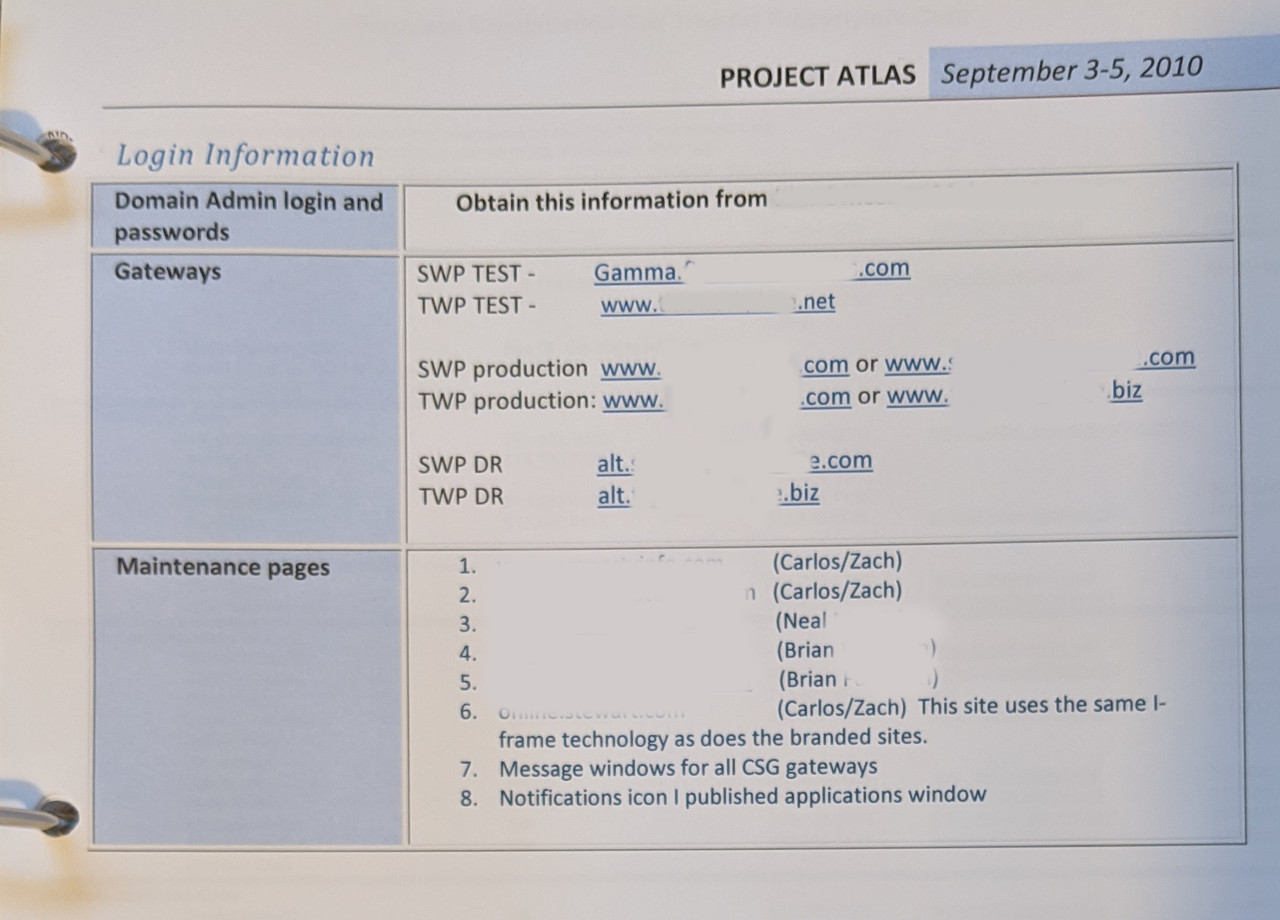

Even at the time, this whole plan seemed really absurd to everybody involved. The circumstances that made paying for a full team to travel, hiring a security team, and hiring multiple truck drivers cheaper than just buying and installing new equipment at the new facility really were a product of the recession. We were too broke to buy all new equipment, and everybody else was broke too, so the rates on all those services were bottom of the barrel. Concerns were brought up and discussed on weekly calls, and twice-weekly trips were made to both Atlanta and Dallas to ensure every single cable and receiving socket had a colored and numbered label affixed to it. The plan was essentially to unplug everything, and then plug it all in exactly as it was. Cable for cable, port for port, and to do it in a way that didn't require any one person to be an expert on their assigned racks in case any assignee ended up on a delayed flight or was otherwise unable to attend.
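To make the cable-for-cable, port-for-port idea concrete, here is a minimal sketch of how a matching-label check could be expressed. It is not the tooling we used (the real process was physical labels and people). The `Label` type, the `find_mismatches` helper, and the sample colors and numbers are all hypothetical, and it assumes each cable end carries the same color and number as the socket it belongs in.

```python
from typing import NamedTuple


class Label(NamedTuple):
    """A physical label: a color plus a number (hypothetical scheme)."""
    color: str
    number: int


def find_mismatches(connections):
    """Return the (cable_label, port_label) pairs whose labels differ.

    `connections` is an iterable of (cable_label, port_label) tuples, one per
    plugged-in cable end. An empty result means everything went back where
    its label says it belongs.
    """
    return [(cable, port) for cable, port in connections if cable != port]


# Made-up sample data: one correct connection and one cable in the wrong port.
sample_connections = [
    (Label("blue", 101), Label("blue", 101)),
    (Label("red", 7), Label("red", 8)),
]

if __name__ == "__main__":
    for cable, port in find_mismatches(sample_connections):
        print(f"cable {cable.color}-{cable.number} is in port {port.color}-{port.number}")
```

The point of the scheme is that the check needs no knowledge of what any cable does, which is what let anyone cover anyone else's racks.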

This project forced me to learn how to implement an active/active environment. We had a staging center in Houston that, for the purposes of this project, I converted into low-volume production. That environment didn't have the horsepower to sustain 100% of our peak production usage all by itself, but it could feasibly handle 80%, which was plenty to deal with a holiday weekend's worth of B2B traffic. About a week before the move I was able to use F5s and basic DNS round robin to distribute the traffic across both locations. When the move commenced I put a maintenance page up, mostly to cover the various sites and services that for one reason or another couldn't run while their primary equipment was in transit.
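For readers who haven't seen DNS round robin, here is a rough sketch of the idea in Python. It is not an F5 configuration and not what we actually ran. The site table, the weights, the helper names, and the addresses (taken from the reserved documentation ranges) are all assumptions for illustration. Plain round robin gives every site an equal turn; the weights are my addition to reflect that the smaller site should see less traffic.

```python
from itertools import cycle

# Hypothetical site table. Addresses are from reserved documentation ranges,
# and the 3:1 weighting is an assumption, not the real traffic split.
SITES = {
    "atlanta": {"address": "192.0.2.10", "weight": 3},
    "houston": {"address": "198.51.100.10", "weight": 1},
}


def build_rotation(sites):
    """Expand each site into `weight` slots, in alphabetical site order."""
    rotation = []
    for name in sorted(sites):
        rotation.extend([name] * sites[name]["weight"])
    return rotation


def resolver(sites):
    """Yield one address per lookup, cycling through the weighted rotation forever."""
    for name in cycle(build_rotation(sites)):
        yield sites[name]["address"]


def drain(sites, name):
    """Return a copy of the site table without `name`, e.g. while its racks are on a truck."""
    return {site: info for site, info in sites.items() if site != name}


if __name__ == "__main__":
    answers = resolver(SITES)
    print([next(answers) for _ in range(4)])

    # Once Atlanta is unplugged, every lookup should land on Houston.
    during_move = resolver(drain(SITES, "atlanta"))
    print(next(during_move))
```

Running both sites for a week before the move matters for the same reason the sketch has a `drain` step: by the time one site disappears, the other has already been serving real traffic.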

The move itself went off largely as planned. An entire complex network, complete with a public Class C subnet, just picked up and moved across the country in a weekend. There was far more downtime in literally driving the equipment between facilities than in plugging it all in and firing it back up. I was impressed that creating an active/active DR environment was feasible, but I was even more impressed at the sheer effectiveness of thousands of well-crafted labels on cables. This is the event in my career that lets me take real joy in browsing r/cableporn (safe for work).